I guess I don’t have to worry about my job going away quite yet. This is what Twitter’s AI thingy thinks is currently happening in the industry I work in.

D&D General D&D AI Fail

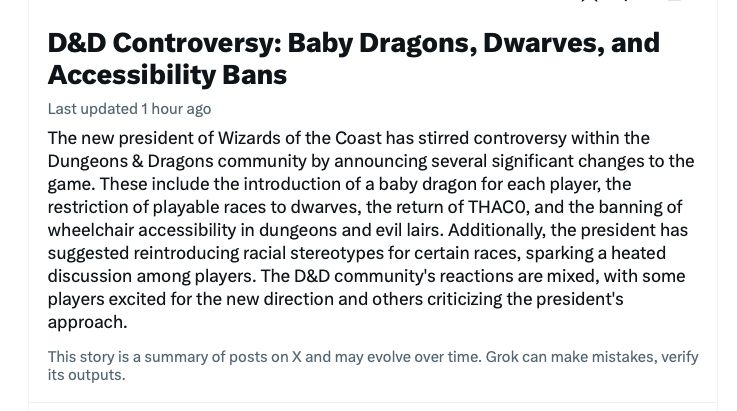

Twitter thinks there's a new WotC president who will give you a baby dragon.

Morrus is the owner of EN World and EN Publishing, creator of the ENnies, creator of the What's OLD is NEW (WOIN), Simply6, and Awfully Cheerful Engine game systems, publisher of Level Up: Advanced 5th Edition, and co-host of the weekly Morrus' Unofficial Tabletop RPG Talk podcast. He has been a game publisher and RPG community administrator for over 20 years, and has been reporting on TTRPG and D&D news for over two decades. He is also on the socials.

> But seriously, that's a humor piece written by a human, or at least cleaned up by one.

But seriously, no it's not. It's Twitter's 'Grok' bot, which claims to summarise news topics.

Eyes of Nine

Everything's Fine

I mean, I'd like to play the dwarf subrace called "elf", who tend to be taller, thinner, less hirsute, and pointier-eared (of course, not all dwarf-elfs, etc., etc.). My baby dragon, I know, will approve.

Yaarel

He Mage

> The AI seems to think mass tweets mean truth.

That is the problem in a nutshell.

Currently, AI can't tell the difference between "trending" and true.

Queer Venger

Dungeon Master is my Daddy

> I guess I don’t have to worry about my job going away quite yet. This is what Twitter’s AI thingy thinks is currently happening in the industry I work in.

You're not out of the woods yet. Ignorant people will still consume this tripe and believe it to be real.

Xethreau

Josh Gentry - Author, Minister in Training

X markets Grok as a "humorous assistant" (source: About X Premium).

I wonder what the punch line is supposed to be exactly.

Whizbang Dustyboots

Gnometown Hero

Don't knock all-dwarves until you've tried it.

EzekielRaiden

Follower of the Way

> That is the problem in a nutshell.
>
> Currently, AI can't tell the difference between "trending" and true.

Because it can't. It is physically incapable of doing so, and unless a radical new development occurs, this will not, cannot change.

Whether or not something is factually true is part of semantic content: the meaning of the statement. LLMs and (to the best of my knowledge) all other current "AIs" have no ability whatsoever to interact with or process semantic content. They can address syntax, which can be very powerful and do some very interesting things,* but they cannot, even in principle, address purely semantic content like truth-value. As some researchers have put it, AIs are "confidently incorrect."

The only way to teach an AI how to avoid this would be to train it to only truly listen to trusted sources, and then it would only be as reliable as the sources it drew upon—and would have some issues if those sources are too few, as it might not have enough training data to spit out meaningful results. In theory though, you could make one designed to collate and summarize existing news reports.

*E.g. I recently learned that in the high-dimensional vector space of the tokens for GPT, if you take the token vector for "king" and add to it the vector that points from the token "male" to the token "female," you actually get relatively close to the token for "queen." That means the vector "female - male" in some sense encodes the syntactic function of gender in the English language, which is pretty cool.
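For anyone curious what that vector arithmetic looks like, here is a minimal sketch. The three-dimensional vectors below are made up by hand purely for illustration (real embeddings have hundreds or thousands of dimensions and are learned, not assigned), and the names `toy_vectors` and `nearest` are hypothetical helpers, not part of any GPT tooling.

```python
import math

# Hand-picked toy "embeddings" (an assumption of this sketch, not real model data).
# Rough meaning of the axes: royalty, maleness, femaleness.
toy_vectors = {
    "king":   [0.9, 0.8, 0.1],
    "queen":  [0.9, 0.1, 0.8],
    "male":   [0.1, 0.9, 0.1],
    "female": [0.1, 0.1, 0.9],
    "dwarf":  [0.2, 0.6, 0.3],
}

def add(a, b):
    """Element-wise sum of two vectors."""
    return [x + y for x, y in zip(a, b)]

def sub(a, b):
    """Element-wise difference a - b."""
    return [x - y for x, y in zip(a, b)]

def cosine(a, b):
    """Cosine similarity: 1.0 means the vectors point the same way."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

def nearest(target, exclude=()):
    """Return the word whose toy vector is most similar to target."""
    candidates = [w for w in toy_vectors if w not in exclude]
    return max(candidates, key=lambda w: cosine(target, toy_vectors[w]))

# The direction pointing from "male" to "female".
gender_direction = sub(toy_vectors["female"], toy_vectors["male"])

# king + (female - male)
result = add(toy_vectors["king"], gender_direction)

closest = nearest(result, exclude={"king"})
print(closest)  # queen
```

With these toy numbers the result lands closest to "queen", but only because the vectors were chosen to make the point; with real embeddings the match is approximate, which is why the claim above is "relatively close" rather than exact.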

EzekielRaiden

Follower of the Way

> X markets Grok as a "humorous assistant" (source: About X Premium).
>
> I wonder what the punch line is supposed to be exactly.

The fact that it is confidently wrong, I would assume.

Clint_L

Hero

> But seriously, no it's not. It's Twitter's 'Grog' bot which claims to summarise news topics.

Intentional misspelling? Though, given the context and content, "Grog" bot might be apt. I believe his intelligence score is 6.
